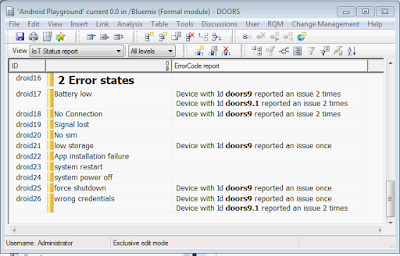

Last time I showed how easy it is to use historian data from your IoT-enabled device in DOORS using the IoT Foundation HTTP API. I thought 'it cannot be that hard to show real-time data', even though I know there are no MQTT callbacks in DXL and no JSON parser (sure, we could write one, but what's the point?).

Sounds challenging. So let's do it!

Let's play!

If you have a spare 15 minutes, please have a look at the wonderful presentation by Dr John Cohn (@johncohnvt) - "The importance of play".

Let's create a very simple static HTML page that you can open in any (recent!) browser and that will connect to IoT Foundation.

Very (and I mean it) simple HTML page

The page will have just two p elements: one for static info and one updated dynamically from JavaScript. It will additionally include 3 scripts: jQuery, Paho and an MQTT connection helper class.

The entire page code is simply:

<!DOCTYPE html>

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />

<script type="text/javascript" src="jquery.min.js"></script>

<title>MQTT listener</title>

</head>

<body onContextMenu="return false">

<p>Please do not close</p>

<p id="pStatus"></p>

<script type="text/javascript" src="mqttws31.js"></script>

<script type="text/javascript" src="realtime.js"></script>

<script type="text/javascript">

// org, apikey, apitoken, device_type and device_id are placeholders: define them with your own values first

var realtime = new Realtime(org, apikey, apitoken, device_type, device_id);

</script>

</body>

</html>

MQTT connection

As you know, IoT devices communicate over MQTT, a lightweight device-to-device protocol implemented in many languages. Several JavaScript implementations are available; you can find them at http://mqtt.org/tag/javascript. Personally I'm using Paho because it is very easy to use with IBM Bluemix services.

Using Paho is straightforward: you create a new Messaging.Client object, providing the host, port and clientId.
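If you wonder what to pass as host and clientId, here is a minimal sketch. It assumes the IoT Foundation conventions for application clients (host `<org>.messaging.internetofthings.ibmcloud.com`, clientId `a:<org>:<appId>`), which are not spelled out in this post, so please check them against your service documentation. The function name `buildClientSettings` and the sample values are made up for illustration.

```javascript
// Assumed IoT Foundation conventions (not stated in this post):
//   host:     <org>.messaging.internetofthings.ibmcloud.com
//   clientId: a:<org>:<appId>   ("a" marks an application client)
function buildClientSettings(org, appId) {
    return {
        hostname: org + ".messaging.internetofthings.ibmcloud.com",
        port: 8883,
        clientId: "a:" + org + ":" + appId
    };
}

// "myorg" and "doors-listener" are hypothetical sample values.
var settings = buildClientSettings("myorg", "doors-listener");
console.log(settings.hostname + " " + settings.clientId);

// In the browser you would then create the Paho client with:
// new Messaging.Client(settings.hostname, settings.port, settings.clientId);
```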

For the newly created client you should provide two callbacks:

- onMessageArrived - function(msg)

- onConnectionLost - function(e)

var client = new Messaging.Client(hostname, 8883, clientId);

client.onMessageArrived = function(msg) {

var topic = msg.destinationName;

var payload = JSON.parse(msg.payloadString);

data = payload.d;

};

client.onConnectionLost = function(e) {

console.log("Connection Lost at " + Date.now() + " : " + e.errorCode + " : " + e.errorMessage);

// reconnect through the client object rather than relying on how Paho binds "this"

client.connect(connectOptions);

};
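The onMessageArrived callback above trusts that every message is valid JSON with a "d" member. A slightly more defensive version could look like the sketch below. The helper name `extractDeviceData` and the sample payload are my own illustration, not part of realtime.js.

```javascript
// Returns the "d" member of an event payload, or null when the
// payload is not valid JSON or has no "d" member.
function extractDeviceData(payloadString) {
    try {
        var payload = JSON.parse(payloadString);
        if (payload !== null && typeof payload === "object" && payload.d !== undefined) {
            return payload.d;
        }
        return null;
    } catch (e) {
        // JSON.parse throws a SyntaxError on malformed input
        return null;
    }
}

// Hypothetical sample message with the same shape the callback expects.
var sample = '{"d":{"yaw":12.5,"pitch":-3,"roll":0.75}}';
var parsed = extractDeviceData(sample);
console.log(parsed.yaw, parsed.pitch, parsed.roll);
```

Inside the callback you would then write `data = extractDeviceData(msg.payloadString);` and keep the old value when the result is null, if that suits your use case better.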

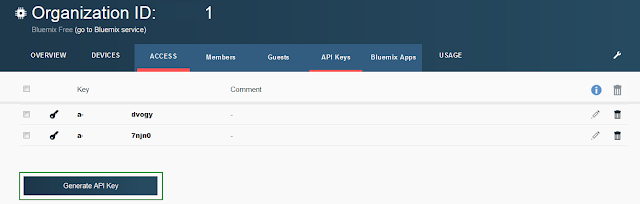

Then you construct a connection options object, which is passed to the connect function of Messaging.Client. This starts the connection with the IoT MQTT broker.

You have to specify:

- keepAliveInterval - number - keep-alive interval in seconds

- useSSL - boolean

- userName - string - as usual, this is the API Key for your organization

- password - string - the API Token

- onSuccess - function() - executed when the connection succeeds

- onFailure - function(e) - executed on failure

var connectOptions = new Object();

connectOptions.keepAliveInterval = 3600;

connectOptions.useSSL=true;

connectOptions.userName=api_key;

connectOptions.password=auth_token;

connectOptions.onSuccess = function() {

console.log("MQTT connected to host: "+client.host+" port : "+client.port+" at " + Date.now());

$('#pStatus').text("MQTT connected to host: "+client.host+" port : "+client.port);

self.subscribeToDevice();

}

connectOptions.onFailure = function(e) {

console.log("MQTT connection failed at " + Date.now() + "\nerror: " + e.errorCode + " : " + e.errorMessage);

$('#pStatus').text("MQTT connection failed at " + Date.now() + "\nerror: " + e.errorCode + " : " + e.errorMessage);

}

console.log("about to connect to " + client.host);

$("#pStatus").text("about to connect to "+client.host);

client.connect(connectOptions);
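If you prefer to keep the options in one place, the same object can be built by a small factory. This is only a sketch: `buildConnectOptions` is a name I made up, the credentials are fake, and the option names are the ones used in the code above.

```javascript
// Builds the options object passed to client.connect().
function buildConnectOptions(apiKey, authToken, onSuccess, onFailure) {
    return {
        keepAliveInterval: 3600, // seconds, same value as above
        useSSL: true,
        userName: apiKey,
        password: authToken,
        onSuccess: onSuccess,
        onFailure: onFailure
    };
}

// Hypothetical credentials, for illustration only.
var options = buildConnectOptions(
    "my-api-key",
    "my-api-token",
    function () { console.log("connected"); },
    function (e) { console.log("failed: " + e.errorMessage); }
);
console.log(Object.keys(options).join(", "));
```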

Once you establish a connection with the IoT MQTT broker, you should subscribe to a topic.

var self = this;

// Subscribe to the device when the device ID is selected.

this.subscribeToDevice = function(){

var subscribeOptions = {

qos : 0,

onSuccess : function() {

console.log("subscribed to " + subscribeTopic);

},

onFailure : function(){

console.log("Failed to subscribe to " + subscribeTopic);

console.log("As messages are not available, visualization is not possible");

}

};

if(subscribeTopic != "") {

console.log("Unsubscribing from " + subscribeTopic);

client.unsubscribe(subscribeTopic);

}

subscribeTopic = "iot-2/type/" + deviceType + "/id/" + deviceId + "/evt/+/fmt/json";

client.subscribe(subscribeTopic,subscribeOptions);

}
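The topic string is the part that is easiest to get wrong, so it may help to isolate it. The sketch below builds the same topic as subscribeToDevice and also splits an incoming topic (msg.destinationName) back into its parts. Both function names are hypothetical, and "accel" and "dev1" are sample values.

```javascript
// Builds an event topic; eventId defaults to the "+" wildcard,
// which matches any single event name.
function buildEventTopic(deviceType, deviceId, eventId) {
    return "iot-2/type/" + deviceType + "/id/" + deviceId +
           "/evt/" + (eventId || "+") + "/fmt/json";
}

// Splits a concrete event topic into its parts, or returns null
// when the topic does not follow the pattern above.
function parseEventTopic(topic) {
    var m = /^iot-2\/type\/([^\/]+)\/id\/([^\/]+)\/evt\/([^\/]+)\/fmt\/([^\/]+)$/.exec(topic);
    if (m === null) {
        return null;
    }
    return { deviceType: m[1], deviceId: m[2], eventId: m[3], format: m[4] };
}

var topic = buildEventTopic("accel", "dev1");
console.log(topic);

var parts = parseEventTopic("iot-2/type/accel/id/dev1/evt/status/fmt/json");
console.log(parts.eventId);
```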

DOORS9 HTML window

Now it's time to display this very simple page in DOORS 9:

bool onB4Navigate(DBE dbe,string URL,frame,body){return true}

void onComplete(DBE dbe, string URL){}

bool onError(DBE dbe, string URL, string frame, int error){return true}

void onProgress(DBE dbe, int percentage){}

Module refreshModuleReff = null

void moduleRefreshCB(DBE x) {

if (!null refreshModuleReff) {

refresh refreshModuleReff

}

}

string surl="c:\\iot\\realtime.html"

DB iotUIdb = create("do not close")

DBE iotUI=htmlView(iotUIdb, 100, 50, surl, onB4Navigate, onComplete, onError, onProgress)

DBE t = timer(iotUIdb, 1, moduleRefreshCB, "ping")

startTimer(t)

realize iotUIdb

You probably noticed I'm using the timer functionality (page 48 in the DXL Reference Manual). That's because layout DXL runs only when the module is refreshed, redrawn, etc. This simple code ensures the module is refreshed every second.

There is a lot of room for improvement in the above DXL: you can add a toggle to check whether the user wants the refresh, and you can add a noError/lastError block before calling refresh refreshModuleReff.

Now save the above code as %doors_home%/lib/dxl/startup/iot.dxl and restart DOORS. This creates a top-level window that can be accessed from any DXL context in your DOORS 9 session.

Layout DXL

Finally we can add some worker code. It uses the hidden perm, which has the following syntax:

string hidden(DBE, string)

string property = obj."accel_property"

if (property != "none") {

noError()

refreshModuleReff = current Module

string strval = hidden(iotUI, "realtime." property "()")

string err = lastError

if (!null err) { halt }

real val = realOf strval

//enable the line below if your Module is similar to the one from the last post

//obj."iot_data" = val

display strval

}

I put the whole code from the "MQTT connection" chapter into realtime.js and created additional helper methods to get the required values:

this.yaw = function() {

if (data != null) return data.yaw;

return -101;

}

this.pitch = function() {

if (data != null) return data.pitch;

return -101;

}

this.roll = function() {

if (data != null) return data.roll;

return -101;

}

var data = null;

Now the module displays real-time data from a device connected to IoT Foundation. It has a delay of about 1 second; you may want to synchronize the data object when it is retrieved from realtime.js.
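One way to read "synchronize" here is to hand out all three values in a single call, so they always come from the same message. The sketch below is my own assumption of how that could look. `Readings`, `update` and `snapshot` are hypothetical names, and the DXL side would have to split the returned string itself.

```javascript
// Keeps the latest message and returns yaw, pitch and roll together.
function Readings() {
    var data = null;

    // Call this from onMessageArrived with payload.d
    this.update = function (d) {
        data = d;
    };

    // Returns "yaw;pitch;roll" from one message, or "" before the first message.
    this.snapshot = function () {
        if (data === null) {
            return "";
        }
        return [data.yaw, data.pitch, data.roll].join(";");
    };
}

var readings = new Readings();
var before = readings.snapshot();
readings.update({ yaw: 1.5, pitch: -2, roll: 0.25 });
var after = readings.snapshot();
console.log(after); // prints "1.5;-2;0.25"
```

The trade-off is that the layout DXL no longer gets a ready-to-use number from a single call, so this only pays off when you display more than one property per object.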

Well, in the end I didn't need to write a JSON parser in DXL!

Conclusions

DOORS reading IoT real-time data is possible, and easy! It was even simpler than I initially thought ;)

Remember, in this blog I'm just giving examples of what can be done; it's up to you to extend it with unsubscribe, or to change the device type and device depending on the current module. There are many things you might want to add.